Find the terms by letter

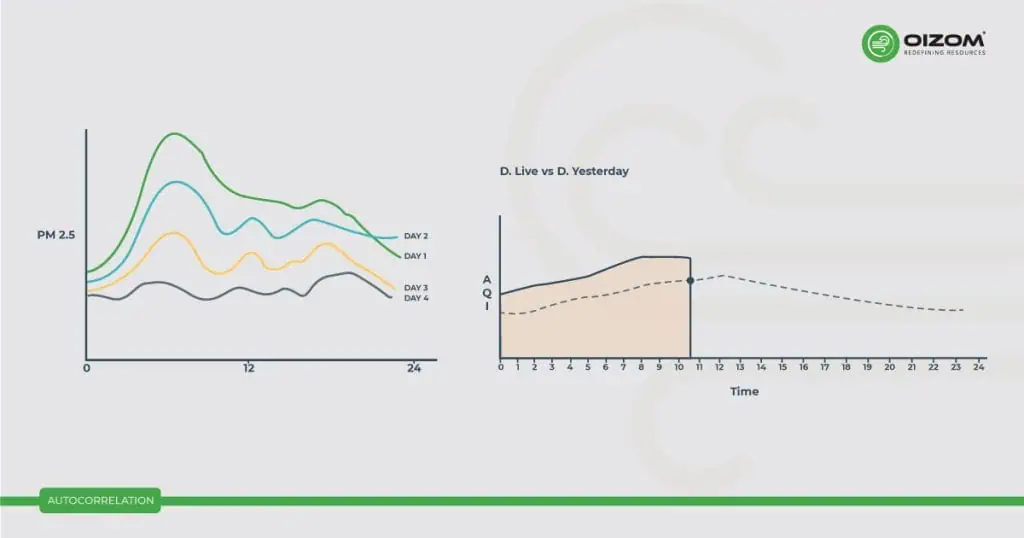

Autocorrelation

Definition

“Autocorrelation, also known as serial correlation, is a statistical concept that measures the degree to which a time series or a signal is correlated with a lagged version of itself. In other words, it assesses the correlation between data points at different time intervals within the same series.

When the autocorrelation is high at a specific lag, it indicates that there is a repeating pattern or relationship between data points separated by that time lag. Autocorrelation is often used in time series analysis to identify trends, seasonality, or periodic patterns in data. It is a valuable tool in fields such as statistics, signal processing, and econometrics for understanding and modeling temporal dependencies in data.”
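To make "correlation with a lagged version of itself" concrete, one common sample estimator can be written as follows. This is a sketch of a standard textbook form rather than a formula taken from this entry; the symbols $x_t$ (the observation at time $t$), $N$ (the series length), $k$ (the lag), and $\bar{x}$ (the sample mean) are notation introduced here for illustration.

$$
r_k = \frac{\sum_{t=1}^{N-k} (x_t - \bar{x})(x_{t+k} - \bar{x})}{\sum_{t=1}^{N} (x_t - \bar{x})^2}, \qquad \bar{x} = \frac{1}{N}\sum_{t=1}^{N} x_t
$$

Under this definition $r_0 = 1$, and values of $r_k$ near $1$ or $-1$ indicate that points $k$ steps apart tend to move together or in opposition, respectively.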

Definition and Description

“Autocorrelation, also called serial correlation, is a statistical concept used to quantify the extent to which a time series or a signal correlates with a delayed or lagged version of itself. In simpler terms, it’s a way to measure how related data points at different time intervals are within the same dataset.

When autocorrelation is high at a specific time lag, it signifies a recurring pattern or connection between data points separated by that lag. Autocorrelation is a central tool in time series analysis, where it helps identify trends, seasonal variations, or repetitive patterns in the data. It is applied in fields such as statistics, signal processing, and econometrics to understand and model temporal dependencies in data.”
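As a rough illustration of how a high value at a specific lag reveals a repeating pattern, the following minimal Python sketch computes a sample autocorrelation for a synthetic series with a 12-step cycle. The function name `sample_autocorrelation`, the estimator (lagged products of mean-centered values divided by the total sum of squares), and the sine-wave test data are assumptions chosen for this example, not something prescribed by the definition above.

```python
import math


def sample_autocorrelation(x, lag):
    """Sample autocorrelation of the sequence x at a non-negative integer lag.

    Hypothetical helper for illustration: uses the common estimator that
    divides the lagged sum of products by the full sum of squares.
    """
    n = len(x)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(x)")
    mean = sum(x) / n
    centered = [value - mean for value in x]
    denominator = sum(c * c for c in centered)
    if denominator == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    # Pair each point with the point `lag` steps later.
    numerator = sum(centered[t] * centered[t + lag] for t in range(n - lag))
    return numerator / denominator


# Synthetic series (an assumption for the demo): a sine wave with period 12,
# sampled for 48 steps, e.g. four "years" of monthly data.
series = [math.sin(2 * math.pi * t / 12) for t in range(48)]

r_full_cycle = sample_autocorrelation(series, 12)  # one full period apart
r_half_cycle = sample_autocorrelation(series, 6)   # half a period apart

print(f"lag 12: {r_full_cycle:.3f}")
print(f"lag 6:  {r_half_cycle:.3f}")
```

In this sketch the value at lag 12 is strongly positive because points one full cycle apart line up, while the value at lag 6 is strongly negative because points half a cycle apart move in opposite directions. With this estimator the magnitude shrinks as the lag grows, since fewer pairs contribute to the numerator.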