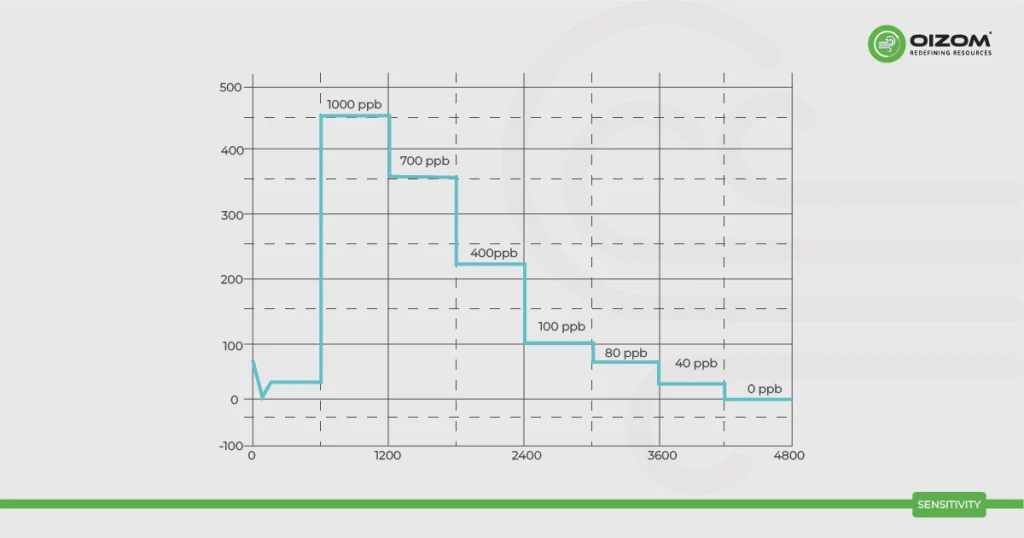

Sensitivity

Definition

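A sensor’s sensitivity indicates how much its output changes per unit change in the input quantity it measures. For instance, if the mercury column in a thermometer moves 1 cm when the temperature changes by 1 °C, its sensitivity is 1 cm/°C. Formally, sensitivity is the slope dy/dx of the sensor’s output y with respect to its input x. For a sensor with a linear characteristic this slope is constant, so it can be computed as Δy/Δx over any interval. For a nonlinear sensor it varies with the operating point.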
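Because sensitivity is a slope, it can be estimated from calibration data by fitting a straight line to paired (input, output) readings. The following is a minimal Python sketch that assumes a linear characteristic and uses an ordinary least-squares fit. The function name `sensitivity` and the sample readings are hypothetical and serve only as an illustration.

```python
def sensitivity(inputs, outputs):
    """Estimate sensitivity as the least-squares slope dy/dx of calibration data.

    Assumes a linear sensor characteristic; for a nonlinear sensor the result
    is only an average slope over the calibration range.
    """
    n = len(inputs)
    if n != len(outputs) or n < 2:
        raise ValueError("need at least two paired (input, output) readings")
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    # Spread of the inputs around their mean.
    sxx = sum((x - mean_x) ** 2 for x in inputs)
    if sxx == 0:
        raise ValueError("inputs must not all be equal")
    # Joint variation of inputs and outputs.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    return sxy / sxx  # slope = output units per input unit


# Hypothetical thermometer calibration: temperature in °C, column height in cm.
temps_c = [20.0, 21.0, 22.0, 23.0]
heights_cm = [5.0, 6.0, 7.0, 8.0]
print(sensitivity(temps_c, heights_cm))  # 1.0 (cm/°C)
```

Least squares is used here rather than a single Δy/Δx so that small reading errors at individual calibration points are averaged out. For a nonlinear sensor, the local sensitivity should instead be estimated from closely spaced readings around the operating point of interest.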
